Properties of polynomial roots

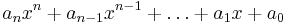

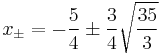

In mathematics, a polynomial is a function of the form

$$p(x) = a_0 + a_1 x + \cdots + a_n x^n,$$

where the coefficients $a_0, \ldots, a_n$ are complex numbers and $a_n \neq 0$. The fundamental theorem of algebra states that the polynomial p has n roots, counted with multiplicity. The aim of this page is to list various properties of these roots.

Continuous dependence on coefficients

The n roots of a polynomial of degree n depend continuously on the coefficients. This means that there are n continuous functions $r_1, \ldots, r_n$, depending on the coefficients, that parametrize the roots with correct multiplicity.

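Since the coefficients of the characteristic polynomial of a matrix are polynomial functions of its entries, this result implies that the eigenvalues of a matrix depend continuously on the matrix. A proof can be found in Tyrtyshnikov (1997).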

However, the problem of approximating the roots given the coefficients can be ill-conditioned: a tiny change in the coefficients may move the roots by a much larger amount. See, for example, Wilkinson's polynomial.
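A double root shows the effect concretely. The following is a minimal Python sketch. The helper names `quadratic_roots` and `root_shift` are ours, and the polynomial $(x-1)^2$ is chosen only for illustration. Lowering the constant coefficient by ε turns the double root 1 into the pair $1 \pm \sqrt{\varepsilon}$. A coefficient change of $10^{-10}$ therefore moves the roots by about $10^{-5}$. The roots still depend continuously on the coefficients, but far from linearly.

```python
import cmath

def quadratic_roots(a, b, c):
    """Both roots of a*x**2 + b*x + c (a != 0), via the quadratic formula."""
    disc = cmath.sqrt(b * b - 4 * a * c)
    return (-b + disc) / (2 * a), (-b - disc) / (2 * a)

def root_shift(eps):
    """Largest distance of the roots of x**2 - 2x + (1 - eps) from the double root 1."""
    r1, r2 = quadratic_roots(1.0, -2.0, 1.0 - eps)
    return max(abs(r1 - 1), abs(r2 - 1))

for eps in (1e-2, 1e-6, 1e-10):
    # The root moves by about sqrt(eps), much more than the coefficient change eps.
    print(f"coefficient change {eps:.0e} -> root shift {root_shift(eps):.1e}")
```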

Complex conjugate root theorem

The complex conjugate root theorem states that if the coefficients of a polynomial are real, then its non-real roots appear in conjugate pairs of the form a ± ib.

For example, the equation $x^2 + 1 = 0$ has roots ±i.

Radical conjugate roots

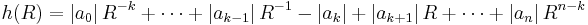

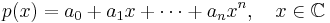

It can be proved that if a polynomial P(x) with rational coefficients has a + √b as a root, where a, b are rational and √b is irrational, then a − √b is also a root. First observe that

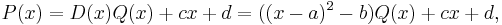

Denote this quadratic polynomial by D(x). Then, by the division transformation for polynomials,

where c, d are rational numbers (by virtue of the fact that the coefficients of P(x) and D(x) are all rational). But a + √b is a root of P(x):

It follows that c, d must be zero, since otherwise the final equality could be arranged to suggest the irrationality of rational values (and vice versa). Hence P(x) = D(x)Q(x), for some quotient polynomial Q(x), and D(x) is a factor of P(x).[1]
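The factorization argument can be checked with exact rational arithmetic. The following Python sketch divides P(x) by D(x) = (x − a)² − b and confirms that the remainder cx + d vanishes. The helper names are ours, and so is the example polynomial, which was chosen so that 1 + √2 is a root.

```python
from fractions import Fraction

def poly_divmod(num, den):
    """Long division of polynomials given as coefficient lists, highest degree first.
    Uses exact rational arithmetic; returns (quotient, remainder)."""
    num = [Fraction(c) for c in num]
    den = [Fraction(c) for c in den]
    quotient = []
    while len(num) >= len(den):
        factor = num[0] / den[0]
        quotient.append(factor)
        # Subtract factor * den, aligned with the current leading term of num.
        for i, d in enumerate(den):
            num[i] -= factor * d
        num.pop(0)  # the leading coefficient is now exactly zero
    return quotient, num

def conjugate_quadratic(a, b):
    """D(x) = (x - a)**2 - b = x**2 - 2a*x + (a**2 - b), for rational a, b."""
    a, b = Fraction(a), Fraction(b)
    return [Fraction(1), -2 * a, a * a - b]

# Illustrative choice: P(x) = x^3 - 5x^2 + 5x + 3 has the root 1 + sqrt(2).
P = [1, -5, 5, 3]
D = conjugate_quadratic(1, 2)   # x^2 - 2x - 1
Q, R = poly_divmod(P, D)
print("Q =", [str(c) for c in Q], " remainder =", [str(c) for c in R])
```

Here the quotient is Q(x) = x − 3 and the remainder is zero. So P(x) = (x² − 2x − 1)(x − 3), and 1 − √2 is also a root.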

Bounds on (complex) polynomial roots

Based on the Rouché theorem

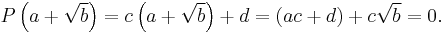

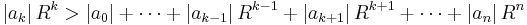

A very general class of bounds on the magnitude of roots is implied by the Rouché theorem. If there is a positive real number R and a coefficient index k such that

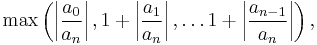

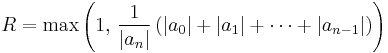

then there are exactly k (counted with multiplicity) roots of absolute value less than R. For k=0,n there is always a solution to this inequality, for example

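- for k = n,

  $$R = 1 + \max\left(\left|\frac{a_0}{a_n}\right|, \ldots, \left|\frac{a_{n-1}}{a_n}\right|\right)$$

  (Cauchy's bound) is an upper bound for the size of all roots,

- for k = 0, provided $a_0 \neq 0$,

  $$R = \frac{|a_0|}{|a_0| + \max\left(|a_1|, \ldots, |a_n|\right)}$$

  is a lower bound for the size of all of the roots,

- for all other indices, the function

  $$h(R) = |a_0| R^{-k} + \cdots + |a_{k-1}| R^{-1} - |a_k| + |a_{k+1}| R + \cdots + |a_n| R^{n-k},$$

  obtained by dividing the inequality by $R^k$ (so that the inequality holds exactly when $h(R) < 0$), is convex on the positive real numbers, so its minimizing point is easy to determine numerically. If the minimal value is negative, one has found additional information on the location of the roots.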
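The following is a minimal Python sketch of these Rouché bounds. We adopt our own conventions: `a[j]` is the coefficient of $x^j$, and all function names are hypothetical. The sketch computes the k = n and k = 0 radii. For an intermediate k, it minimizes h by ternary search over t = log R. This works because h(eᵗ) is a nonnegative combination of exponentials plus a constant, so it is also convex. Restricting the search to the interval between the two radii loses nothing: any R with h(R) < 0 separates the roots and therefore lies between them.

```python
import math

def cauchy_upper(a):
    """Case k = n (Cauchy's bound): every root z has |z| < 1 + max_{j<n} |a_j / a_n|."""
    n = len(a) - 1
    return 1 + max(abs(a[j] / a[n]) for j in range(n))

def cauchy_lower(a):
    """Case k = 0 (needs a[0] != 0): every root z has |z| > |a_0| / (|a_0| + max_{j>=1} |a_j|)."""
    m = max(abs(c) for c in a[1:])
    return abs(a[0]) / (abs(a[0]) + m)

def rouche_h(a, k, R):
    """h(R) = sum_{j != k} |a_j| R**(j - k) - |a_k|; h(R) < 0 means exactly k roots in |z| < R."""
    return sum(abs(c) * R ** (j - k) for j, c in enumerate(a) if j != k) - abs(a[k])

def rouche_min(a, k, iters=200):
    """Minimise h over [cauchy_lower, cauchy_upper] by ternary search in t = log R."""
    lo, hi = math.log(cauchy_lower(a)), math.log(cauchy_upper(a))
    for _ in range(iters):
        t1 = lo + (hi - lo) / 3
        t2 = hi - (hi - lo) / 3
        if rouche_h(a, k, math.exp(t1)) < rouche_h(a, k, math.exp(t2)):
            hi = t2   # by convexity the minimum is not to the right of t2
        else:
            lo = t1   # by convexity the minimum is not to the left of t1
    R = math.exp((lo + hi) / 2)
    return R, rouche_h(a, k, R)

a = [1, -10.1, 1]                      # x^2 - 10.1x + 1, roots 0.1 and 10
print(cauchy_lower(a), cauchy_upper(a))
print(rouche_min(a, 1))                # a negative minimum certifies one root in |z| < R
```

For $x^2 - 10.1x + 1$, the minimum of h for k = 1 is about −8.1, attained at R ≈ 1. So exactly one root lies in |z| < 1.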

One can increase the separation of the roots, and thus the ability to find additional separating circles from the coefficients, by applying the root-squaring operation of the Dandelin–Graeffe iteration to the polynomial. This operation replaces p by a polynomial whose roots are the squares of the roots of p.
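One root-squaring step can be carried out with the identity $q(x^2) = (-1)^n p(x)\,p(-x)$. The right-hand side has the roots ±r for each root r of p, so q has the roots r². Below is a minimal Python sketch. The function name is ours, and coefficients are stored with `a[j]` the coefficient of $x^j$.

```python
def graeffe_step(a):
    """One Dandelin-Graeffe root-squaring step.
    a[j] is the coefficient of x**j; the result has the squared roots of a."""
    n = len(a) - 1
    a_neg = [c if j % 2 == 0 else -c for j, c in enumerate(a)]  # coefficients of p(-x)
    prod = [0] * (2 * n + 1)
    for i, c in enumerate(a):
        for j, d in enumerate(a_neg):
            prod[i + j] += c * d            # p(x) * p(-x) is an even polynomial
    sign = -1 if n % 2 else 1
    return [sign * prod[2 * j] for j in range(n + 1)]  # read off q(y) from q(x**2)

p = [2, -3, 1]               # (x - 1)(x - 2)
p1 = graeffe_step(p)         # roots 1 and 4
p2 = graeffe_step(p1)        # roots 1 and 16
print(p1, p2)
```

For (x − 1)(x − 2), two steps give polynomials with the roots 1, 4 and then 1, 16. The ratio between the root moduli grows from 2 to 16.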

A different approach is to apply the Gershgorin circle theorem to a companion matrix of the polynomial, as is done in the Weierstraß (Durand–Kerner) method. Starting from initial estimates of the roots, which may be quite rough, one obtains unions of circles that contain the roots of the polynomial.
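The Weierstraß (Durand–Kerner) iteration itself can be sketched in Python as follows. This minimal sketch performs only the root-updating step, with updated values used immediately within each sweep. It does not construct the Gershgorin inclusion circles. The starting points (0.4 + 0.9i)ᵏ, the iteration cap and the tolerance are conventional choices of ours, not taken from the text.

```python
def durand_kerner(a, iters=500, tol=1e-12):
    """Approximate all roots of sum a[j] * x**j (a[-1] != 0) by the
    Weierstrass / Durand-Kerner iteration."""
    n = len(a) - 1
    lead = a[-1]

    def monic(z):
        # Horner evaluation of p(z) / a_n.
        acc = 0j
        for c in reversed(a):
            acc = acc * z + c
        return acc / lead

    z = [(0.4 + 0.9j) ** k for k in range(n)]   # distinct, non-real starting points
    for _ in range(iters):
        biggest_step = 0.0
        for i in range(n):
            denom = 1 + 0j
            for j in range(n):
                if j != i:
                    denom *= z[i] - z[j]
            w = monic(z[i]) / denom            # Weierstrass correction for z[i]
            z[i] -= w
            biggest_step = max(biggest_step, abs(w))
        if biggest_step < tol:
            break
    return z

roots = durand_kerner([-6, 11, -6, 1])          # x^3 - 6x^2 + 11x - 6 = (x-1)(x-2)(x-3)
print(sorted(roots, key=lambda r: r.real))
```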

Other bounds

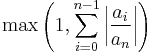

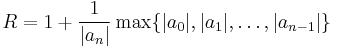

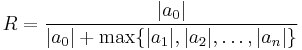

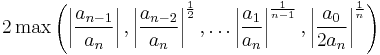

Useful bounds for the magnitude of all polynomial's roots [2] include the near optimal Fujiwara bound

-

(Fujiwara's bound),

(Fujiwara's bound),

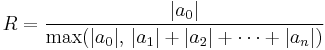

which is an improvement (as the geometric mean) of

-

(Kojima's bound)

(Kojima's bound)

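Other bounds are

$$|z| \le \max\left(1, \sum_{i=0}^{n-1}\left|\frac{a_i}{a_n}\right|\right)$$

or

$$|z| \le \max\left(\left|\frac{a_0}{a_n}\right|, 1 + \left|\frac{a_1}{a_n}\right|, \ldots, 1 + \left|\frac{a_{n-1}}{a_n}\right|\right).$$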
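The following Python sketch evaluates Fujiwara's and Kojima's bounds. For comparison, it also computes Cauchy's bound $1 + \max_{j<n} |a_j/a_n|$. The function names are ours, and `a[j]` is the coefficient of $x^j$. Kojima's bound divides by $a_1, \ldots, a_n$, so the sketch assumes these are nonzero when it is called.

```python
def fujiwara_bound(a):
    """2 * max(|a_{n-1}/a_n|, |a_{n-2}/a_n|**(1/2), ..., |a_0/(2 a_n)|**(1/n))."""
    n = len(a) - 1
    an = abs(a[n])
    terms = [(abs(a[n - k]) / an) ** (1.0 / k) for k in range(1, n)]
    terms.append((abs(a[0]) / (2 * an)) ** (1.0 / n))
    return 2 * max(terms)

def kojima_bound(a):
    """max(2|a_{n-1}/a_n|, 2|a_{n-2}/a_{n-1}|, ..., 2|a_1/a_2|, |a_0/a_1|); needs a_1..a_n != 0."""
    n = len(a) - 1
    terms = [2 * abs(a[j] / a[j + 1]) for j in range(1, n)]
    terms.append(abs(a[0] / a[1]))
    return max(terms)

def cauchy_bound(a):
    """1 + max_{j<n} |a_j / a_n|, for comparison."""
    n = len(a) - 1
    return 1 + max(abs(a[j] / a[n]) for j in range(n))

p = [-6, -5, 5, 5, 1]        # x^4 + 5x^3 + 5x^2 - 5x - 6, largest root modulus 3
print(fujiwara_bound(p), kojima_bound(p), cauchy_bound(p))
q = [-16, 0, 0, 0, 1]        # x^4 - 16, all roots of modulus 2 (Kojima undefined: a_1 = 0)
print(fujiwara_bound(q), cauchy_bound(q))
```

For the first polynomial, Fujiwara and Kojima both give 10 while Cauchy's bound gives 7. So none of these bounds dominates in every case. For $x^4 - 16$, Fujiwara gives $2 \cdot 8^{1/4} \approx 3.36$ against Cauchy's 17.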

Gauss–Lucas theorem

The Gauss–Lucas theorem states that the convex hull of the roots of a polynomial contains the roots of the derivative of the polynomial.

A sometimes useful corollary is that if all roots of a polynomial have positive real part, then so do the roots of all derivatives of the polynomial.
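Here is a small numerical illustration for a cubic. Its three roots span a triangle, and by the theorem both roots of the derivative lie in that triangle. The Python sketch below builds the polynomial from its roots, solves the quadratic p′(z) = 0 and tests triangle membership with cross products. The helper names and the example roots 0, 4 and 4i are ours.

```python
import cmath

def poly_from_roots(roots):
    """Coefficients (highest degree first) of the monic polynomial with these roots."""
    coeffs = [1 + 0j]
    for r in roots:
        # Multiply by (z - r): z*p(z) minus r*p(z), aligned by degree.
        coeffs = [hi - r * lo for hi, lo in zip(coeffs + [0], [0] + coeffs)]
    return coeffs

def derivative(coeffs):
    """Derivative of a polynomial given highest degree first."""
    n = len(coeffs) - 1
    return [c * (n - k) for k, c in enumerate(coeffs[:-1])]

def quadratic_roots(a, b, c):
    """Both roots of a*z**2 + b*z + c (a != 0)."""
    disc = cmath.sqrt(b * b - 4 * a * c)
    return (-b + disc) / (2 * a), (-b - disc) / (2 * a)

def cross(o, p, q):
    """z-component of the planar cross product (p - o) x (q - o)."""
    return ((p - o).conjugate() * (q - o)).imag

def in_triangle(z, a, b, c, eps=1e-12):
    """True if z lies in the closed triangle abc, the convex hull of a, b, c."""
    d = [cross(a, b, z), cross(b, c, z), cross(c, a, z)]
    return not (min(d) < -eps and max(d) > eps)

roots = [0, 4, 4j]                          # illustrative roots of the cubic p
dp = derivative(poly_from_roots(roots))     # p' is a quadratic
crit = quadratic_roots(*dp)
print(crit, [in_triangle(w, *roots) for w in crit])
```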

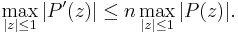

A related result is Bernstein's inequality. It states that for a polynomial P of degree n with derivative P′ we have

$$\max_{|z| \le 1} |P'(z)| \le n \max_{|z| \le 1} |P(z)|.$$
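The following Python sketch checks numerically that the maximum of |P′| over the closed unit disc is at most n times the maximum of |P|. By the maximum modulus principle, both maxima are attained on the circle |z| = 1. The sketch samples that circle at finitely many points, so the computed ratio is only an approximation. The function names and the sample count are ours. The monomial zⁿ attains equality.

```python
import cmath

def poly_eval(a, z):
    """Evaluate sum a[j] * z**j by Horner's rule."""
    acc = 0j
    for c in reversed(a):
        acc = acc * z + c
    return acc

def bernstein_ratio(a, samples=4096):
    """Estimate max|P'| / max|P| over the unit disc by sampling the circle |z| = 1."""
    da = [j * c for j, c in enumerate(a)][1:]    # coefficients of P'
    pts = [cmath.exp(2j * cmath.pi * t / samples) for t in range(samples)]
    max_p = max(abs(poly_eval(a, z)) for z in pts)
    max_dp = max(abs(poly_eval(da, z)) for z in pts)
    return max_dp / max_p

for a in ([0, 0, 0, 1], [1, 2, -1, 0.5]):        # z**3 and an arbitrary cubic
    n = len(a) - 1
    print(n, bernstein_ratio(a))                 # Bernstein: ratio <= n
```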

Polynomials with real roots

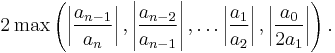

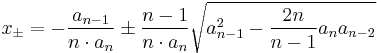

If $p(x) = a_n x^n + a_{n-1} x^{n-1} + \cdots + a_1 x + a_0$ is a polynomial such that all of its roots are real, then they are located in the interval with endpoints

$$-\frac{a_{n-1}}{n a_n} \pm \frac{n-1}{n a_n}\sqrt{a_{n-1}^2 - \frac{2n}{n-1}\, a_n a_{n-2}}.$$

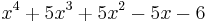

Example: The polynomial $x^4 + 5x^3 + 5x^2 - 5x - 6$ has four real roots -3, -2, -1 and 1. The formula gives

$$-\frac{5}{4} \pm \frac{3}{4}\sqrt{\frac{35}{3}},$$

so its roots are contained in

$$I = [-3.8117,\ 1.3117].$$
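The interval formula is easy to evaluate directly. This Python sketch reproduces the interval for the example polynomial $x^4 + 5x^3 + 5x^2 - 5x - 6$. The function name is ours. The sketch assumes n ≥ 2 and that all roots are real, and it does not check the latter.

```python
import math

def real_root_interval(a):
    """a[j] is the coefficient of x**j, a[-1] != 0; all roots are assumed real.
    Endpoints: -a_{n-1}/(n a_n) +/- (n-1)/(n a_n) * sqrt(a_{n-1}**2 - 2n/(n-1) * a_n * a_{n-2})."""
    n = len(a) - 1
    an, an1, an2 = a[n], a[n - 1], a[n - 2]
    center = -an1 / (n * an)
    radius = (n - 1) / (n * an) * math.sqrt(an1 ** 2 - 2 * n / (n - 1) * an * an2)
    return tuple(sorted((center - radius, center + radius)))  # sorted in case a_n < 0

lo, hi = real_root_interval([-6, -5, 5, 5, 1])   # x^4 + 5x^3 + 5x^2 - 5x - 6
print(f"[{lo:.4f}, {hi:.4f}]")                   # prints [-3.8117, 1.3117]
```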

See also

- Descartes' rule of signs

- Sturm's theorem

- Abel–Ruffini theorem

- Viète's formulas

- Gauss–Lucas theorem

- Content (algebra)

- Rational root theorem

- Halley's method

- Laguerre's method

- Jenkins–Traub method

Notes

- ^ S. Sastry (2004). Engineering Mathematics. PHI Learning. pp. 72–73. ISBN 8120325796.

- ^ M. Marden (1966). Geometry of Polynomials. Amer. Math. Soc. ISBN 0821815032.

References

- E.E. Tyrtyshnikov, A Brief Introduction to Numerical Analysis, Birkhäuser Boston, 1997
